CS 180 Project: Diffusion Models and Image Processing

Table of Contents

Part A: Implementation and Analysis

- Part 0: Setup

- Part 1: Sampling Loops

- 1.1 Implementing the Forward Process

- 1.2 Classical Denoising

- 1.3 One-Step Denoising

- 1.4 Iterative Denoising

- 1.5 Diffusion Model Sampling

- 1.6 Classifier-Free Guidance (CFG)

- 1.7 Image-to-image Translation

- 1.7.1 Editing Hand-Drawn and Web Images

- 1.7.2 Inpainting

- 1.7.3 Text-Conditional Image-to-image Translation

- 1.8 Visual Anagrams

- 1.9 Hybrid Images

Part B: Model Training and Development

- Part 1: Training a Single-Step Denoising UNet

- Part 2: Training a Diffusion Model

- 2.1 Adding Time Conditioning to UNet

- 2.2 Training the UNet

- 2.3 Sampling from the UNet

- 2.4 Adding Class-Conditioning to UNet

- 2.5 Sampling from the Class-Conditioned UNet

- Bells and Whistles

Part 0: Setup

Throughout this notebook, I used a random seed of 24. As I increased the number of inference steps, the quality of the images seemed to improve. In particular, the realism of the second and third images (the man and the rocket) clearly increased with the number of inference steps. The more complex prompt, an oil painting of a snowy village, fared a bit differently. #TODO

Part 1: Sampling Loops

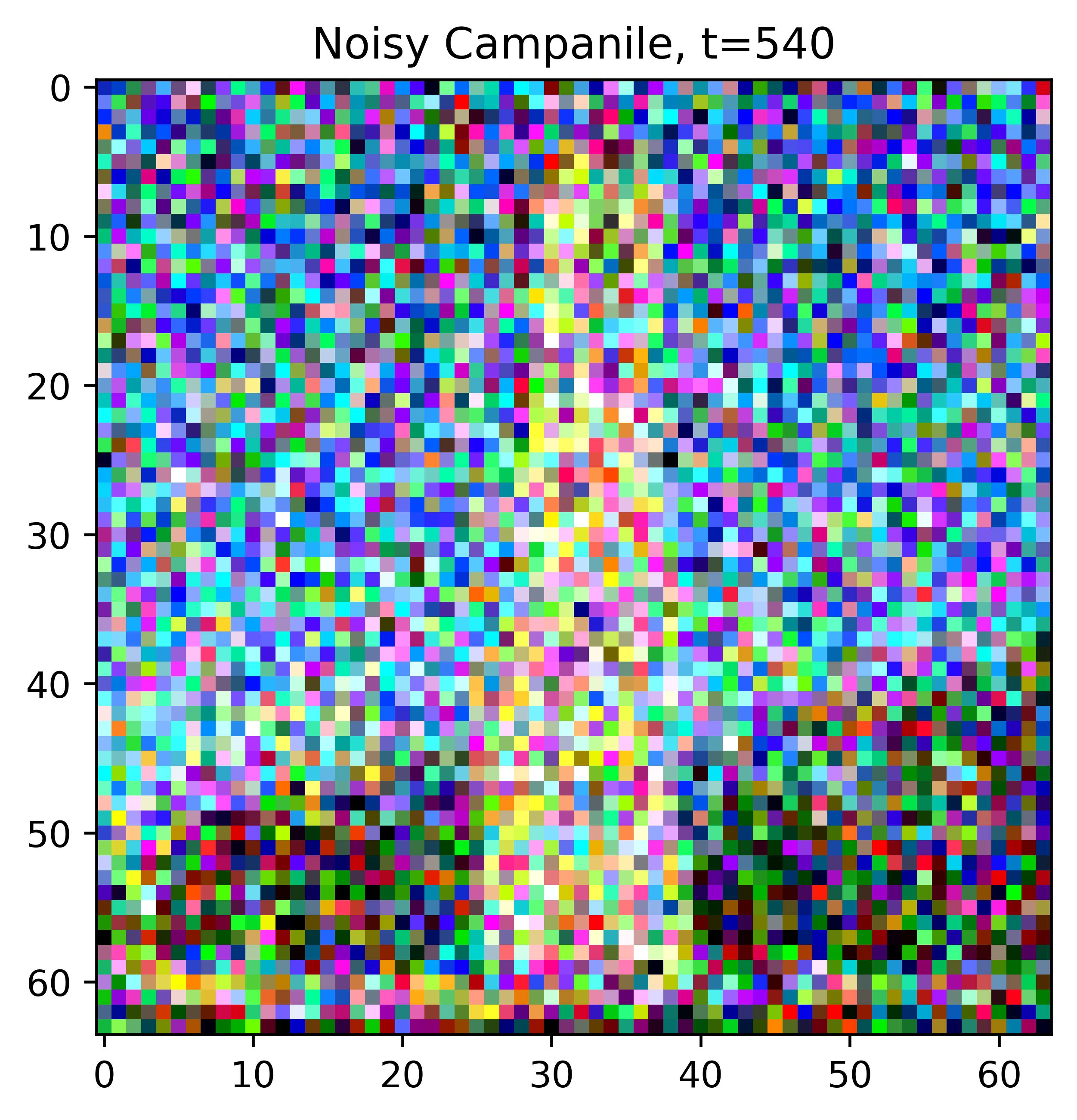

1.1 Implementing the Forward Process

1.2 Classical Denoising

1.3 One-Step Denoising

1.4 Iterative Denoising

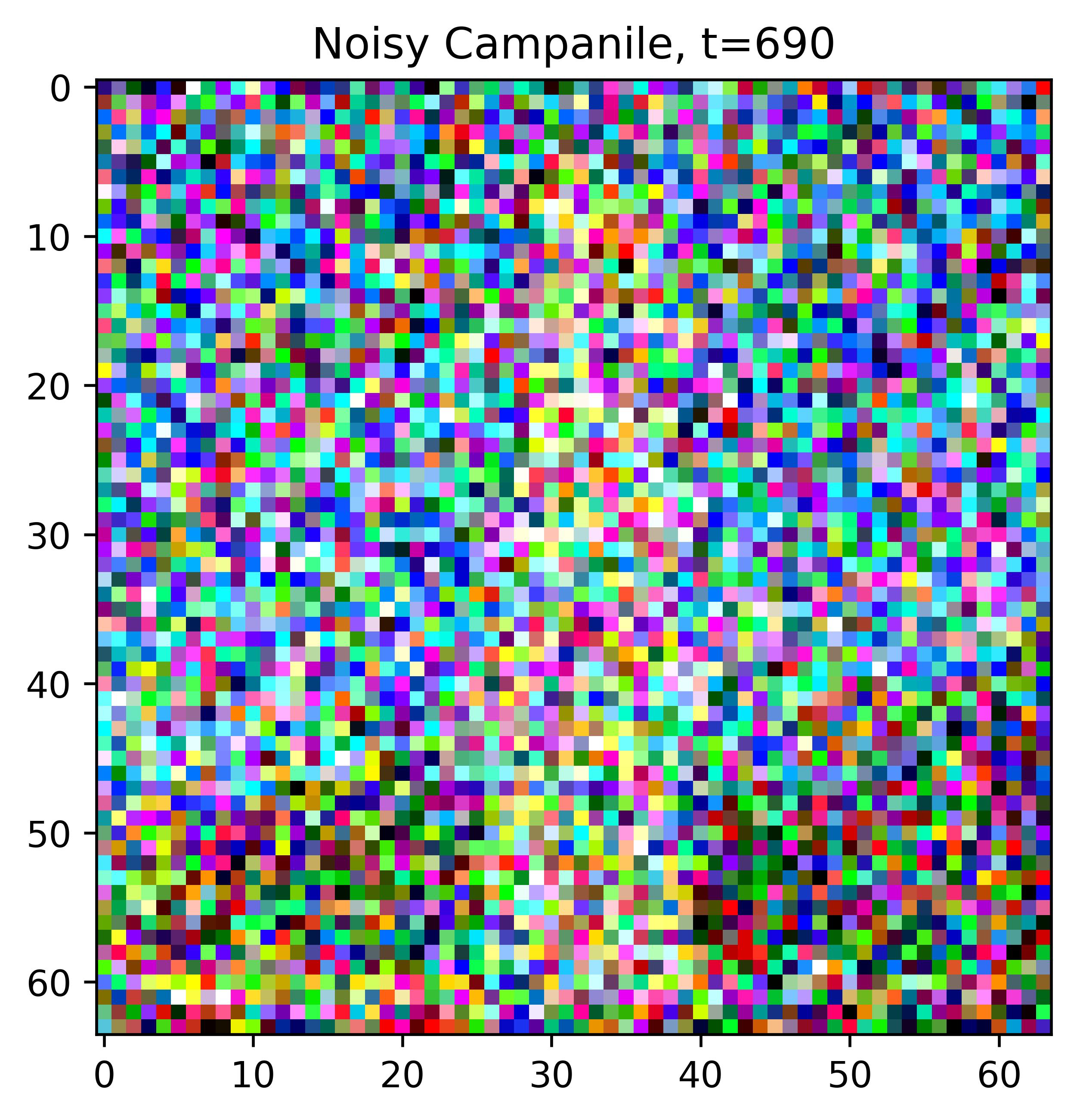

1.5 Diffusion Model Sampling

1.6 Classifier-Free Guidance (CFG)

1.7 Image-to-image Translation

1.7.1 Editing Hand-Drawn and Web Images

1.7.2 Inpainting

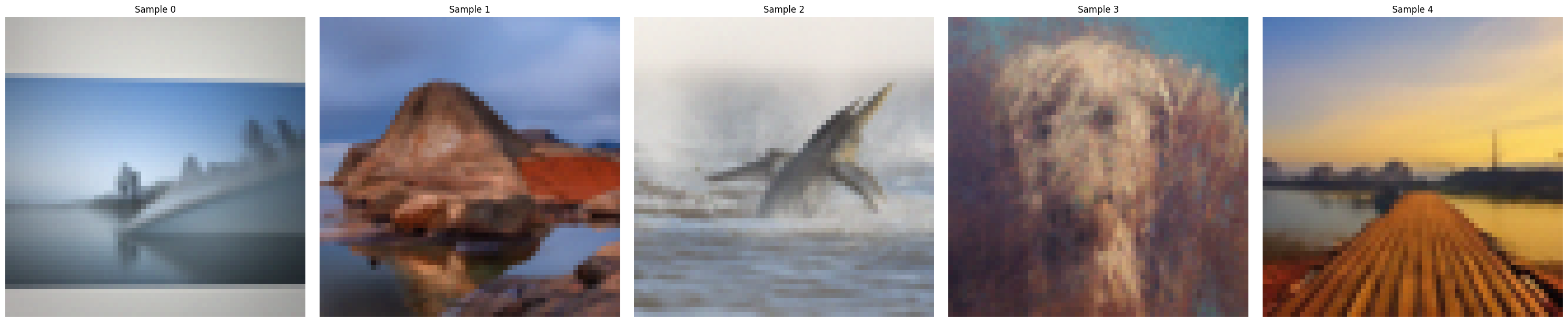

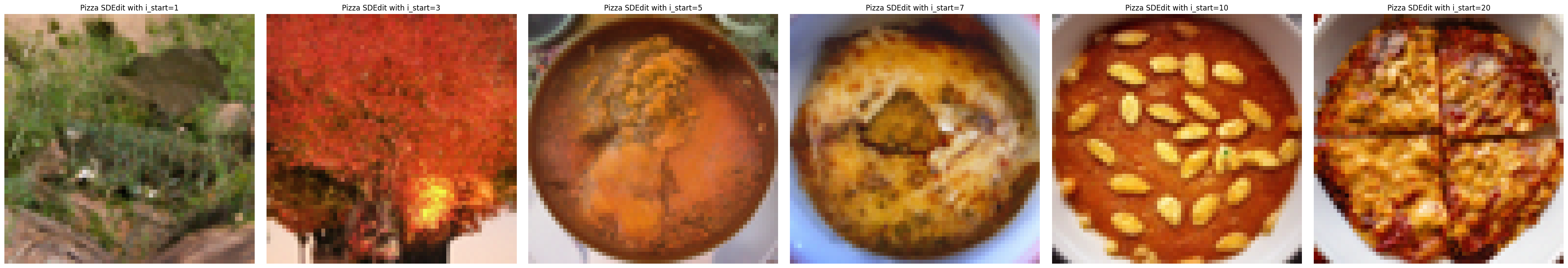

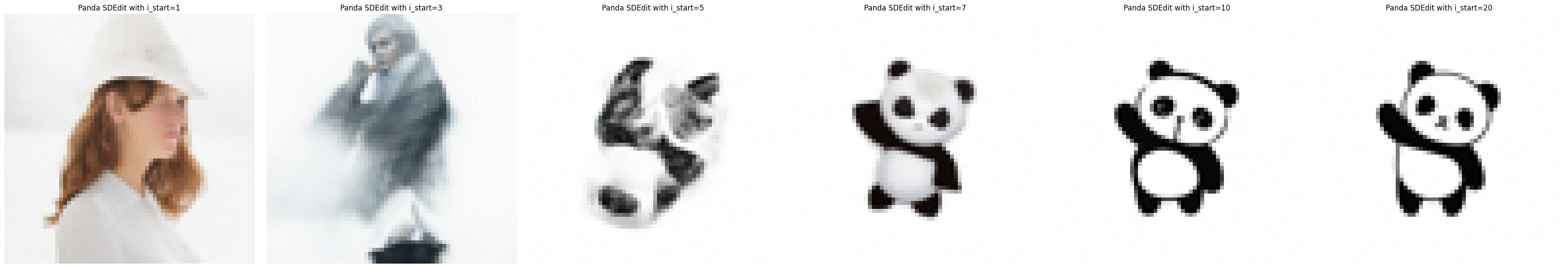

1.7.3 Text-Conditional Image-to-image Translation

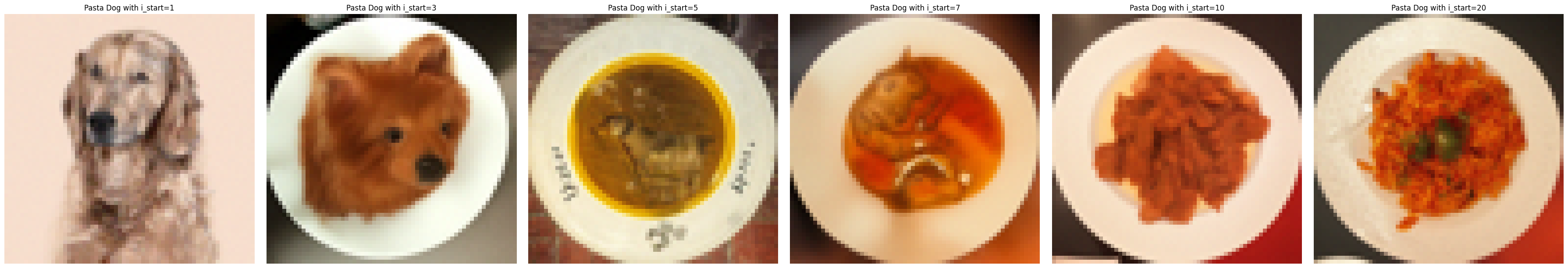

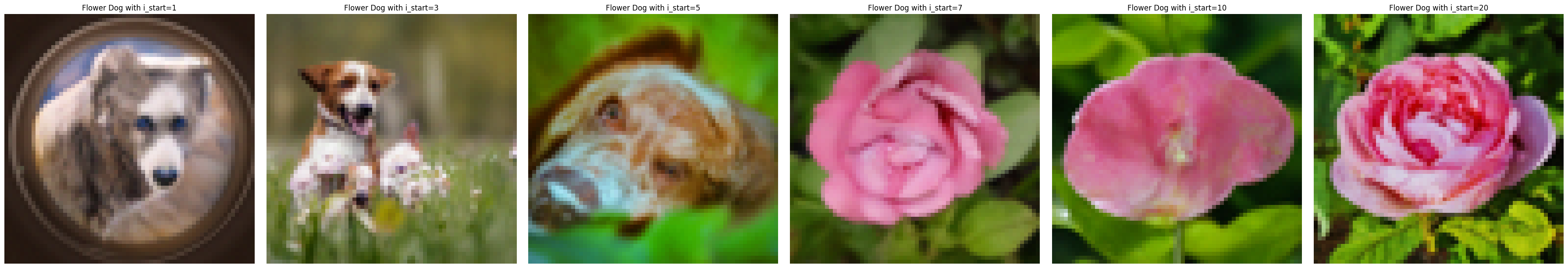

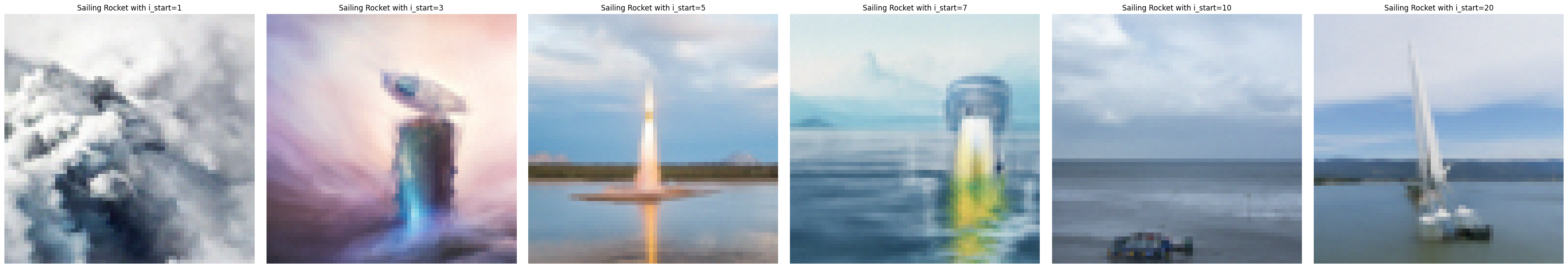

For the Campanile and the last sailing picture I took, I used the prompt "a rocket ship"; for the rest, I used "a photo of a dog" to control the generation.

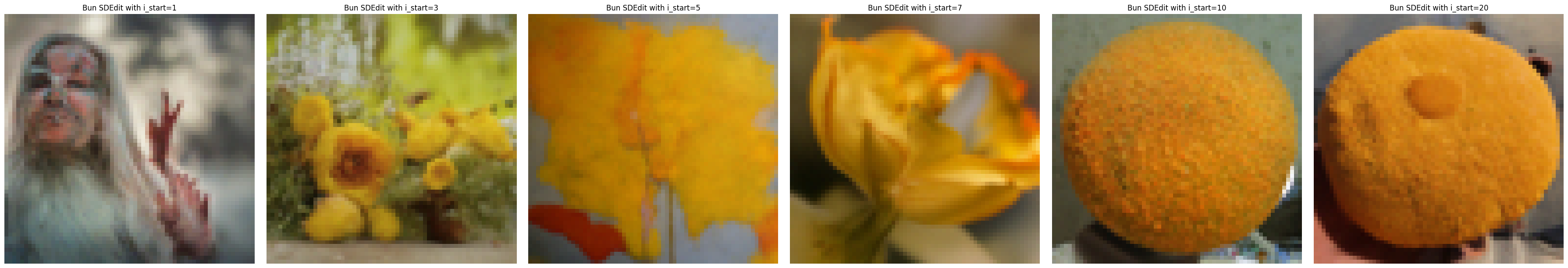

1.8 Visual Anagrams

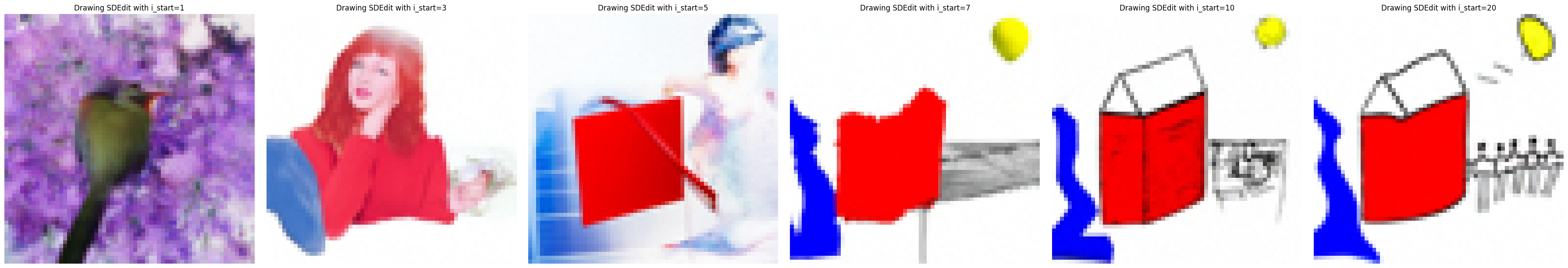

The first two pictures were the best visual anagrams I got when combining the prompts "an oil painting of an old man" and "an oil painting of people around a campfire". I have included the third picture for fun because the anagram is fully right side up: I think both aspects of the picture are quite clear and meld together surprisingly well.

I chose to incorporate dogs into scenery after playing around with some other combinations. Making anagrams that combine two landscapes sometimes proved difficult, as did combining weaker prompts (e.g., a photo of a man, a photo of a hipster barista) with landscapes, which tended to dominate. The ones below were the best because the dogs are strikingly clear, especially in the second one. For the third, bonus anagram, both the coast and the rocket felt quite prominent even without flipping the image over, so I chose to include it.
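As a rough illustration of how two prompts can share one image, here is a minimal sketch of combining noise estimates for a visual anagram. It assumes the usual recipe of averaging an upright estimate with a flipped one; `eps_fn` is a hypothetical stand-in for the diffusion model's noise predictor, not a function from this notebook, and images are assumed to be arrays with height on axis 0.

```python
import numpy as np

def anagram_noise(eps_fn, x, prompt_a, prompt_b):
    """Average an upright noise estimate with a flipped one.

    eps_fn is a hypothetical noise predictor: it takes (image, prompt)
    and returns an array with the same shape as image.
    """
    # Noise estimate for the upright image under the first prompt.
    eps_a = eps_fn(x, prompt_a)
    # Flip vertically, estimate noise under the second prompt, flip back.
    eps_b = np.flipud(eps_fn(np.flipud(x), prompt_b))
    # Each denoising step would then use this averaged estimate.
    return 0.5 * (eps_a + eps_b)
```

Because the second estimate is computed on the flipped image, the second prompt only becomes visible when the final picture is turned upside down.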

1.9 Hybrid Images

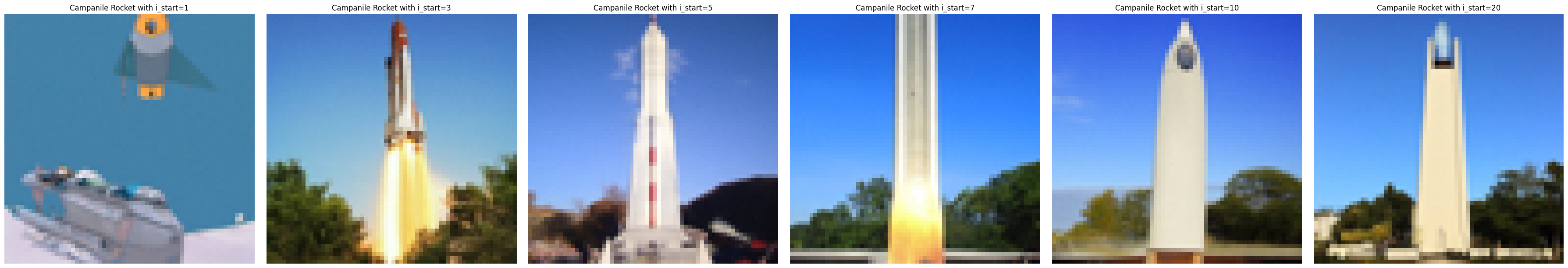

I experimented with many different combinations and picked out what I thought worked best.
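For reference, a minimal sketch of how a hybrid noise estimate could be formed, assuming the common approach of adding the low frequencies of one prompt's noise estimate to the high frequencies of another's. The function names, kernel size, and `sigma` are illustrative assumptions, and the blur here works on 2-D arrays only.

```python
import numpy as np

def gaussian_kernel(size=5, sigma=1.0):
    """1-D Gaussian kernel that sums to 1 (size should be odd)."""
    ax = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-0.5 * (ax / sigma) ** 2)
    return k / k.sum()

def blur(img, size=5, sigma=1.0):
    """Separable Gaussian blur of a 2-D array with edge padding."""
    k = gaussian_kernel(size, sigma)
    padded = np.pad(img, size // 2, mode="edge")
    # Blur along rows, then along columns; 'valid' trims the padding off.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def hybrid_noise(eps_low, eps_high, size=5, sigma=1.0):
    """Low-pass of eps_low plus high-pass of eps_high."""
    low = blur(eps_low, size, sigma)
    high = eps_high - blur(eps_high, size, sigma)
    return low + high
```

In this sketch, `eps_low` would come from the prompt meant to be seen from far away and `eps_high` from the prompt meant to be seen up close.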

Part 1: Training a Single-Step Denoising UNet

1.1 Implementing the UNet

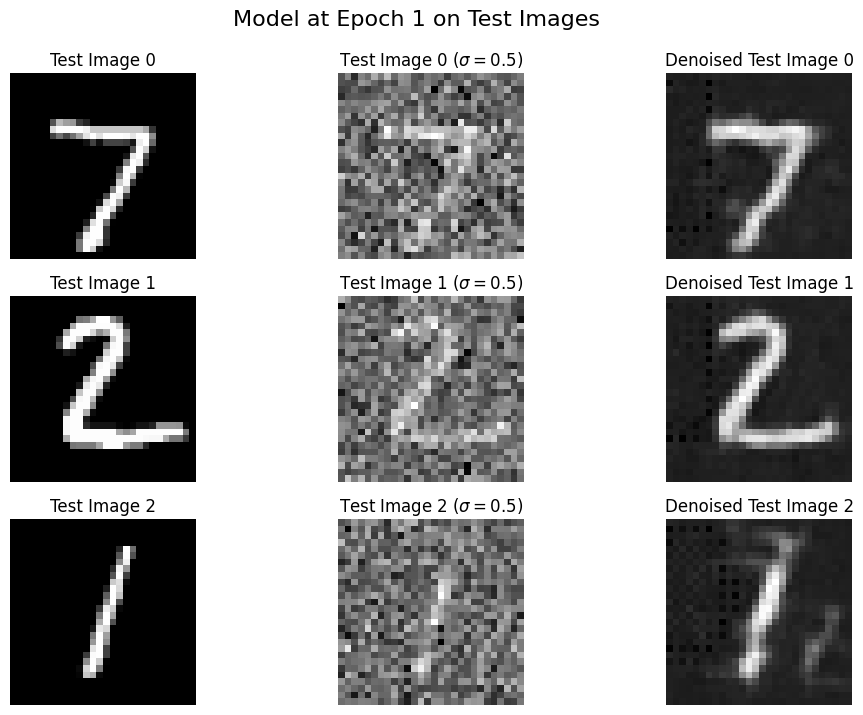

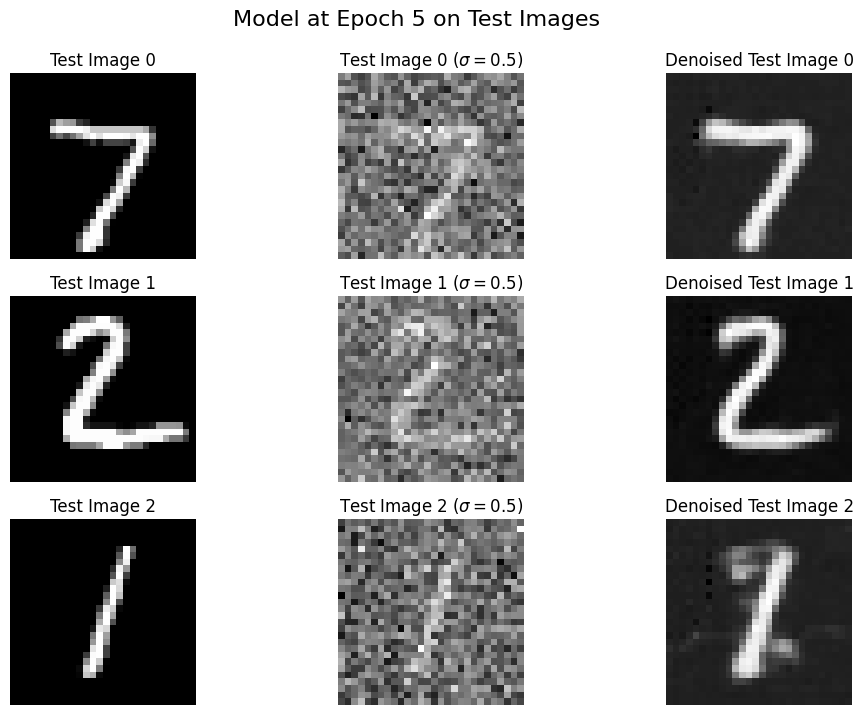

1.2 Using the UNet to Train a Denoiser

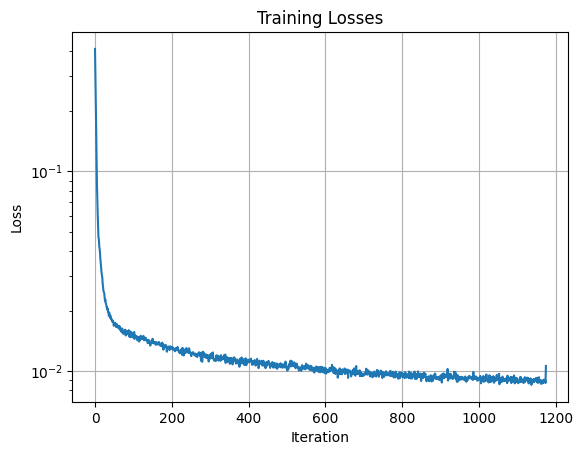

1.2.1 Training

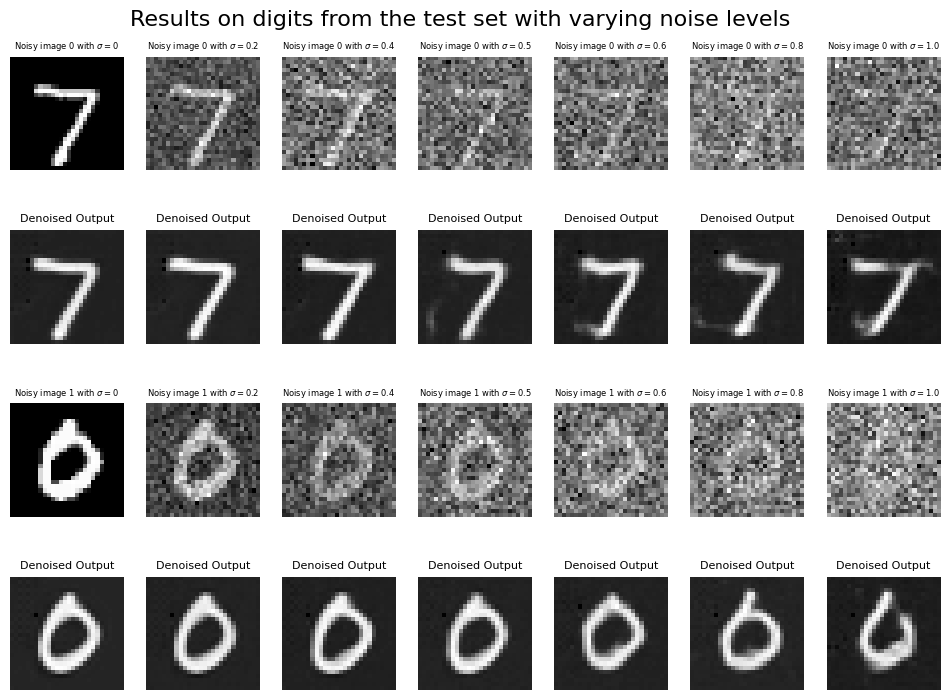

1.2.2 Out-of-Distribution Testing

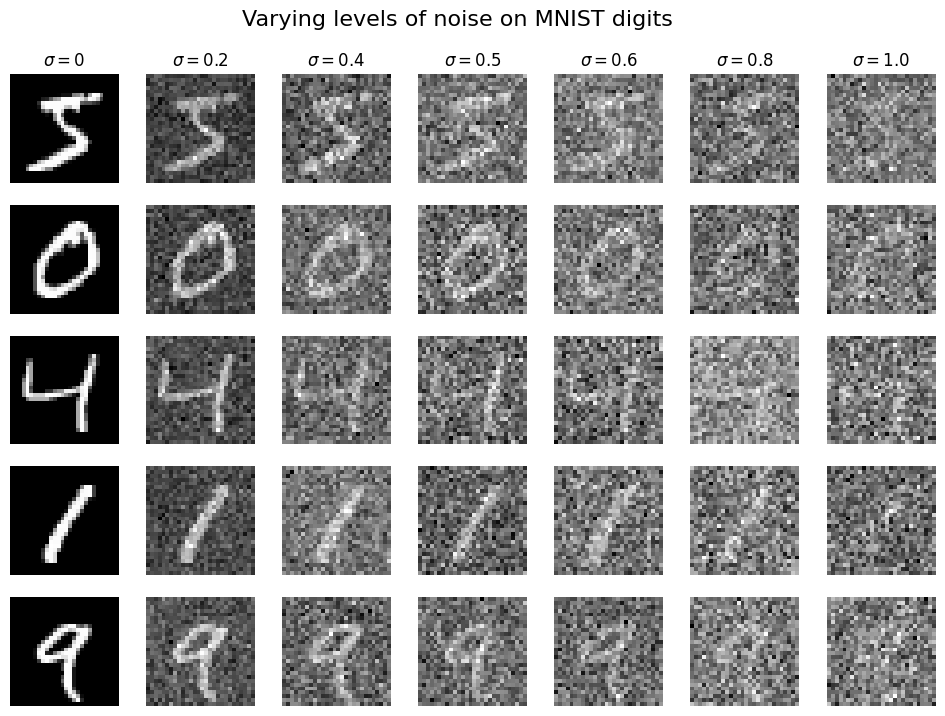

We only trained our model using sigma=0.5; here we test how it performs on different noise levels between 0 and 1. Note how it performs reasonably well for everything up until sigma=1.
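The noisy test inputs can be sketched as below, assuming the noising rule z = x + sigma * eps with eps drawn from a standard normal. The specific sigma values, the 28x28 image size, and the helper name `add_noise` are assumptions for illustration rather than the notebook's actual code.

```python
import numpy as np

def add_noise(x, sigma, rng):
    """Return x plus Gaussian noise with standard deviation sigma."""
    return x + sigma * rng.standard_normal(x.shape)

rng = np.random.default_rng(24)   # seed chosen arbitrarily for the sketch
x = np.zeros((28, 28))            # placeholder for a clean test image
sigmas = [0.0, 0.2, 0.4, 0.5, 0.6, 0.8, 1.0]  # assumed sweep from 0 to 1

# One noisy copy of the same image per noise level.
noisy = {s: add_noise(x, s, rng) for s in sigmas}
```

Each entry of `noisy` would then be passed through the denoiser trained at sigma=0.5 to see how it copes with noise levels it was not trained on.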

Part 2: Training a Diffusion Model

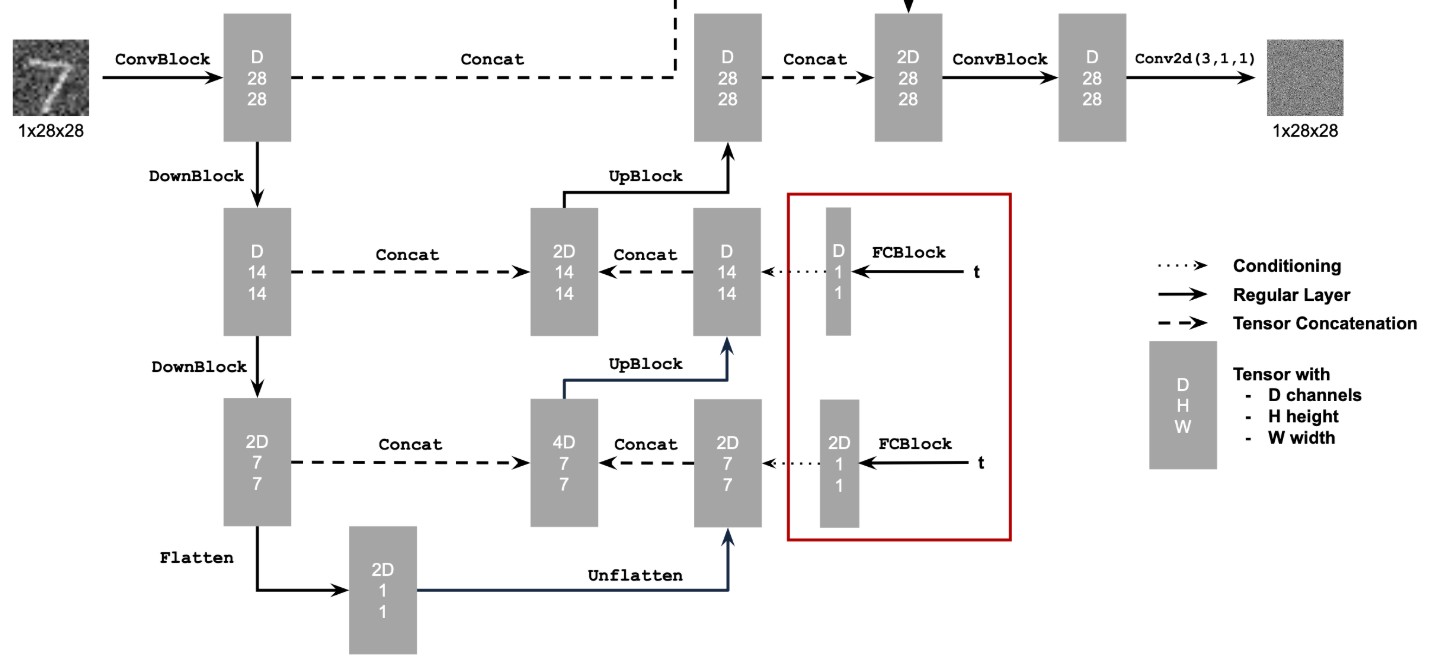

2.1 Adding Time Conditioning to UNet

2.2 Training the UNet

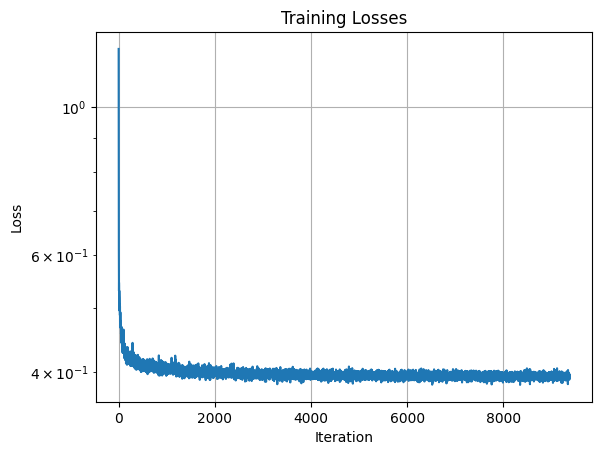

Here, I accidentally trained my model for twice as many epochs as needed (20), resulting in ~8000 iterations. You can see that the loss converges early on.

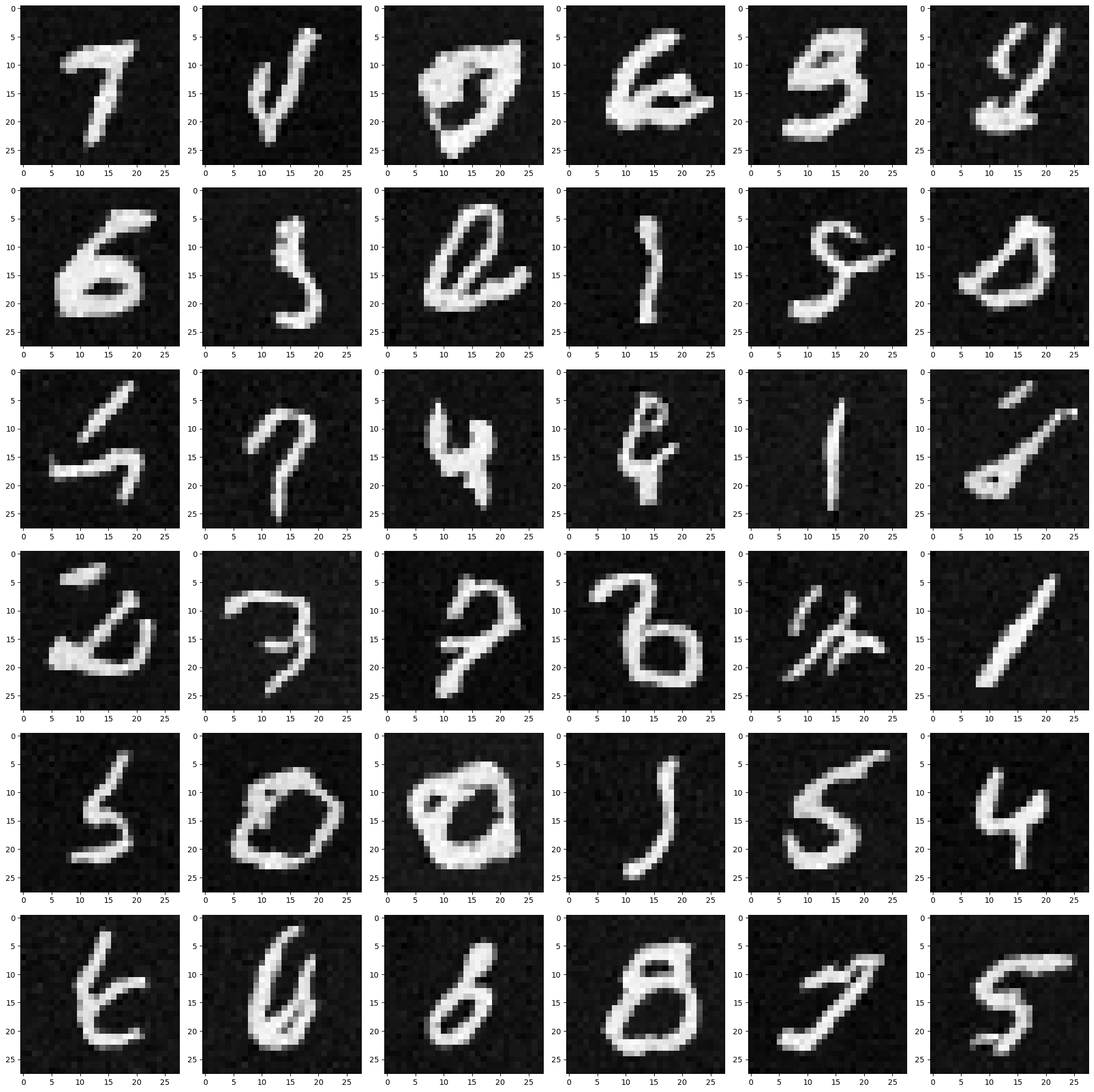

2.3 Sampling from the UNet

Now I sample from my time-conditioned UNet at epochs 5 and 20. Without controlling for class, most of the results don't look like any particular number, but it's a useful proof of concept.

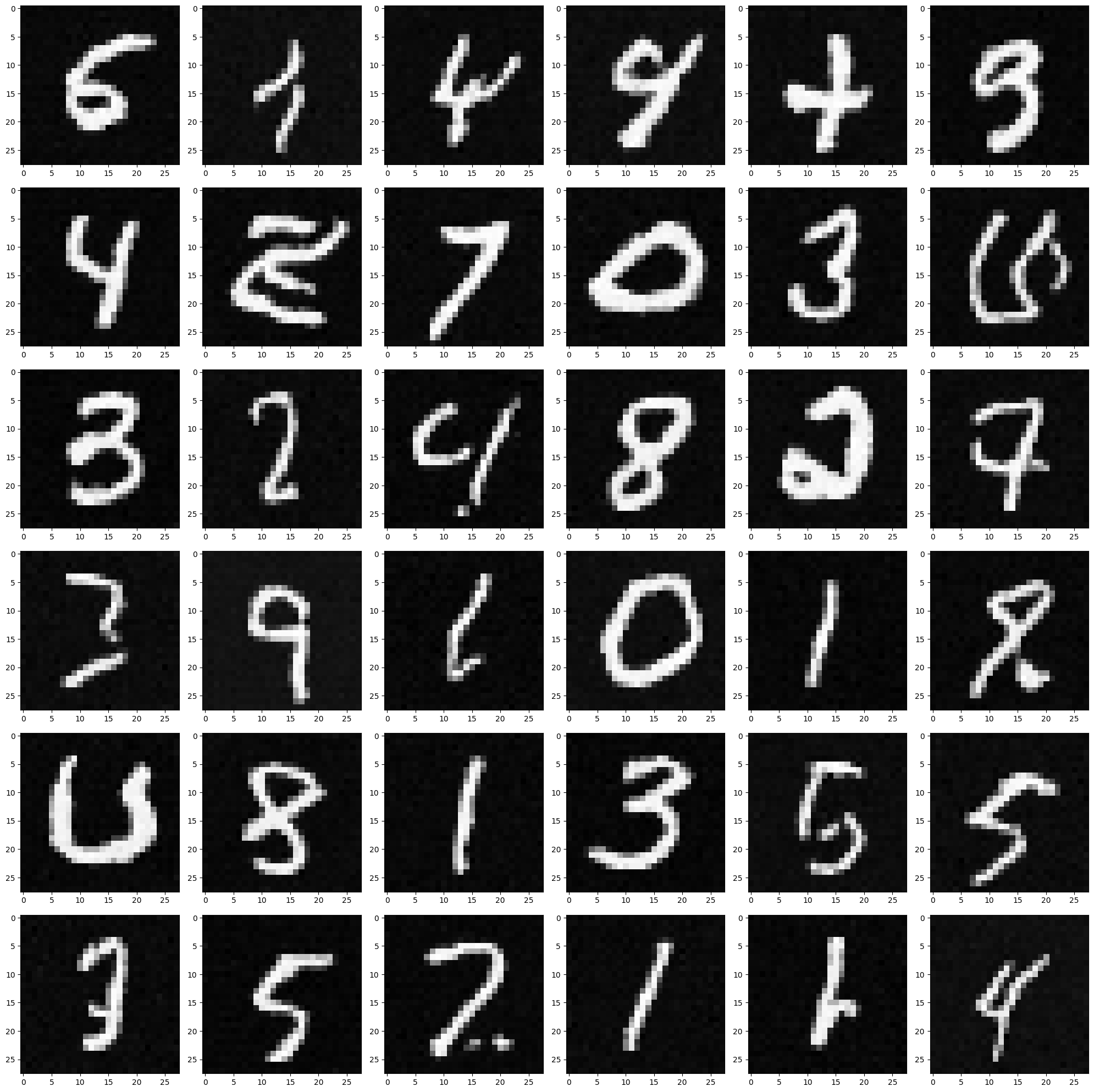

2.4 Adding Class-Conditioning to UNet

It was at this point that I ran out of GPU credit, and my class-conditioned model didn't fare too well.